It seems that I am not alone in my "Architects Rant". Here's another rant by the Secret Geek (this guy publishes some great articles, and also co-produced TimeSnapper).

A little disclaimer against rants such as this: a good architect is worth his/her weight in gold. The problem is that architects who are just "architects" tend to offer little practical value to a project. The best teams I have worked for don't have pure architects per se; they have one or more technical leads who design the system and make the calls on refactoring, etc. And when the tech leads can't unanimously decide on an approach, the project manager or delivery manager steps in and adjudicates. Works for me!

Tuesday, April 7, 2009

Monday, April 6, 2009

Versioning of Web Services

I was asked an interesting question the other day: how do I handle the versioning of web services? It was a hard question to answer (the person who asked me is a delivery manager of a large bank, and openly admitted that he has no real strategy as of yet either).

Incidentally, I had also struggled with another question a year or two ago, which was simply how do I handle versioning of assemblies in .Net. That gets tricky too. And as for handling different jar versions in the Java world, well, that's just a nightmare. Use Ivy I guess!

Web Services 101

- Whack up a wsdl (or generate one from existing classes)

- Implement the service based on the wsdl interface

- Publish the service in some way (UDDI, tell your friends, use twitter.com....)

- Clients subscribe to the service via the wsdl

- Clients produce their own client side proxies

- Clients call web methods, and the server returns the results

To me, when you use web services in their full glory, you effectively have just another API, albeit a facade for a remote server somewhere. So, all the challenges of versioning come into play.

And Then a Change is Made

So what happens when a client wants a new feature, a different result to be returned, etc.? Well, the web service implementation is updated, and perhaps so too is the wsdl (particularly if this is a breaking change).

But, what happens when the implementation of the service changes in a non-breaking way? Do we still tell the world that a new version of the web service exists? Or do we keep this secret?

When a new version of a web service is produced (for whatever reason), there are two sides to consider: the client side and the server side. Yes, we need to write the implementation of the new version, but the clients will require updating too.

Changing one web service client application is not too hard. But what happens when you have 100 different clients all calling the same application, and only some of them want the change?

Luckily, in the .Net world, when a web service is changed, as long as the changes do not break the contract, then clients do not require updating.

In the Java world, I have been told that the client side app will have to be recompiled based on the new wsdl (I guess Java web service implementations do a full regeneration of the wsdl, and clients always do an xsd validation against the wsdl).

An example of a breaking change is this:

Old interface:

MethodA(string arg1, string arg2, string arg3);

New interface:

MethodA(List<string> args);

An example of a non-breaking change (in the .Net world) is:

Old interface:

BankAccount GetAccount(string id);

class BankAccount {

decimal Amount;

}

New interface:

BankAccount GetAccount(string id);

class BankAccount {

decimal Amount;

DateTime? ModifiedUTC;

}

If the client has the new version of the BankAccount class but the server has not been upgraded yet, then the ModifiedUTC field will simply be null on the client. If the server has the new version but the client does not, then the ModifiedUTC field will be sent across the wire to the client, but the old client proxy will simply ignore the additional data within the SOAP packet.
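
The "ignore what you don't know, default what is missing" behaviour described above can be sketched in plain Java. This is only an illustration under assumptions: the XML payloads are simplified stand-ins for a SOAP body, and the class and method names (TolerantReaderSketch, read) are made up for the example rather than taken from any web service framework.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

final class TolerantReaderSketch {

    // Returns the text of the first element with the given tag, or null if it is absent.
    static String read(String xml, String tag) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml)));
            NodeList nodes = doc.getElementsByTagName(tag);
            return nodes.getLength() == 0 ? null : nodes.item(0).getTextContent();
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse payload", e);
        }
    }

    public static void main(String[] args) {
        // Simplified, hypothetical payloads from an old and a new server.
        String oldPayload = "<BankAccount><Amount>12.50</Amount></BankAccount>";
        String newPayload = "<BankAccount><Amount>12.50</Amount>"
                + "<ModifiedUTC>2009-04-06T00:00:00Z</ModifiedUTC></BankAccount>";

        // Old client, new server: the extra element is never looked at.
        System.out.println(read(newPayload, "Amount"));
        // New client, old server: the missing element comes back as null.
        System.out.println(read(oldPayload, "ModifiedUTC"));
    }
}
```

A client that validates the whole payload against a fixed schema would reject the new element instead, which is presumably the situation described for the Java clients above.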

Supporting Side-by-Side Implementations

The good news regarding versioning is that a server can support two versions of a web service side by side. However, this can get messy.

I guess the golden rule here is to take a leaf out of the book of domain driven design.

Design your services as what they are... plain old objects that really don't care who or what calls them, because you might need to support many different types of interfaces, such as web services, RMI, WCF, WEP, MSMQs, you name it. You might also need to support subtly different interfaces as well, such as CorporateBankManagerService and PersonalBankManagerService (both of which call the same bank manager libraries, but have slightly different workflows, API design, etc). So, if the domain is designed correctly, multiple services can be exposed (just as thin wrappers), and running side by side versions isn't a nightmare either.

On the other hand, if your web service *is* your underlying service, well, doing the server side support of multiple versions is just going to get nasty. Perhaps an approach here is to actually have two separate code bases with different namespaces, etc, running side by side on the same box?

On the client side, as long as the new version of the service is there, then porting to it should not normally pose too much of an issue. The catch is that some clients may want a staggered upgrade, where some of the old is called along with some of the new... particularly when there is a chance that a rollback might be required due to an unacceptable bug, design fault, etc discovered in the live new version.

Hosting Multiple Versions of Services

So, how do we support multiple versions of a web service? I like the idea of having web services just as a thin wrapper over the real service... hopefully the underlying domain model can support both the old and the new interfaces (with a bit of shoe-horning). Over time, the old version of the web service (well, interface) can be decommissioned, and an even later version can then come into the design/delivery phase.
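
To make the thin-wrapper idea concrete, here is a minimal sketch in Java. Everything in it is an assumption made for illustration: the Account domain class, the loadAccount stand-in for the real bank manager library, and the V1/V2 contract classes are hypothetical names, and no web service framework is involved.

```java
import java.math.BigDecimal;
import java.time.Instant;

final class SideBySideSketch {

    // Domain object: knows nothing about web services or their versions.
    static final class Account {
        final BigDecimal amount;
        final Instant modifiedUtc;

        Account(BigDecimal amount, Instant modifiedUtc) {
            this.amount = amount;
            this.modifiedUtc = modifiedUtc;
        }
    }

    // Hypothetical stand-in for the real domain/library call.
    static Account loadAccount(String id) {
        return new Account(new BigDecimal("12.50"), Instant.parse("2009-04-06T00:00:00Z"));
    }

    // Version 1 contract: amount only.
    static final class BankAccountV1 {
        final BigDecimal amount;

        BankAccountV1(BigDecimal amount) {
            this.amount = amount;
        }
    }

    // Version 2 contract: adds the modification timestamp.
    static final class BankAccountV2 {
        final BigDecimal amount;
        final Instant modifiedUtc;

        BankAccountV2(BigDecimal amount, Instant modifiedUtc) {
            this.amount = amount;
            this.modifiedUtc = modifiedUtc;
        }
    }

    // Each wrapper only maps the domain object onto its own contract.
    static BankAccountV1 getAccountV1(String id) {
        return new BankAccountV1(loadAccount(id).amount);
    }

    static BankAccountV2 getAccountV2(String id) {
        Account account = loadAccount(id);
        return new BankAccountV2(account.amount, account.modifiedUtc);
    }

    public static void main(String[] args) {
        System.out.println(getAccountV1("abc").amount);
        System.out.println(getAccountV2("abc").modifiedUtc);
    }
}
```

Decommissioning the old version then means deleting one wrapper and one contract class, while the domain code stays untouched.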

Other options (in production today) include UDDI facilities for resolving a web service request to a particular running box - the box that serves the correct version of the web service as requested by the client. In a way this is nice... you can technically have exactly the same interfaces between the old and the new version, and the middleware running on the router/instrumentation server will proxy the request to the correct box, based on the IP address of the requesting service (for example). This could also be done through per application/machine configuration too at a guess.

And Now For Something Completely Different...

One other thought that occurred to me was to use web services just as a transport mechanism. So, the only thing they publish is the fact that they have a method called "Invoke", along with maybe a parameter list which is a collection of serialised arguments, and the invoke method returns a serialised object which is unmarshalled back into an object at the client side (this is kind of how early versions of RMI worked from memory). This has advantages and disadvantages: on the good side everything is very loosely coupled. There is no concept of interfaces really, you just name a method to be invoked, perhaps a version number, and you get back some data. On the down side, you bypass all type support, schema support, discovery support, security support, etc.
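
A rough sketch of the single "Invoke" entry point, written in Java. The assumptions here are mine: arguments and results are plain strings standing in for serialised objects, the method name plus a version number is used as the lookup key, and the names InvokeTransportSketch, register and demo are hypothetical.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

final class InvokeTransportSketch {

    // Handlers keyed by "method#version"; each takes serialised args and returns a serialised result.
    private final Map<String, Function<List<String>, String>> handlers = new HashMap<>();

    void register(String method, int version, Function<List<String>, String> handler) {
        handlers.put(method + "#" + version, handler);
    }

    // The only operation the service would publish.
    String invoke(String method, int version, List<String> serialisedArgs) {
        Function<List<String>, String> handler = handlers.get(method + "#" + version);
        if (handler == null) {
            throw new IllegalArgumentException("Unknown method/version: " + method + " v" + version);
        }
        return handler.apply(serialisedArgs);
    }

    // Builds a service that hosts two versions of the same method side by side.
    static InvokeTransportSketch demo() {
        InvokeTransportSketch service = new InvokeTransportSketch();
        service.register("GetAccount", 1, args -> "amount=12.50");
        service.register("GetAccount", 2, args -> "amount=12.50;modifiedUtc=2009-04-06T00:00:00Z");
        return service;
    }

    public static void main(String[] args) {
        InvokeTransportSketch service = demo();
        System.out.println(service.invoke("GetAccount", 1, Arrays.asList("abc")));
        System.out.println(service.invoke("GetAccount", 2, Arrays.asList("abc")));
    }
}
```

Note that nothing stops a caller from passing the wrong number or type of arguments, which is exactly the loss of type and schema support mentioned above.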

For me, the idea is worth it, as I have never really found all the framework stuff related to web services of any use.

For others, maybe they do, and the idea of reducing web services to a transport mechanism is just plain stupid.

Labels: C#, Java, software development, versioning, web service versioning, web services

Sunday, April 5, 2009

Language Extensions

Here are a few features that I would like to see built into later versions of C#/Java (actually, two of the ideas below were suggested by a friend, but I figured that I would blog them).

The "Block" Statement

Switch blocks are a very nice way of avoiding complicated nested-if structures.

For example:

switch (currentCount)

{

case 0:

DoOneThing();

break;

case 1:

DoAnotherThing();

break;

default:

throw new Exception();

}

But this can only be used in situations where the conditions equate to constants (okay, C# allows some lenience, such as supporting strings as well). But, if you need to have quite complicated evaluations of conditionals, then Switch blocks normally don't work.

So we go into "nested-if" land:

if (GetCurrentCount() == 0)

{

DoOneThing();

}

else

{

if (GetCurrentCount() == 1)

{

DoAnotherThing();

}

else

{

throw new Exception();

}

}

This can work, but can also get messy, and is prone to bugs.

A workaround is to use some form of block mechanism, for example:

do

{

if (currentCount == 0)

{

DoOneThing();

break;

}

if (currentCount == 1)

{

DoAnotherThing();

break;

}

// currentCount must be an illegal value

throw new Exception();

}

while (false);

So, this works, but is a bit messy in terms of a redundant do-while loop (and dangerous if the loop doesn't terminate). A simple language extension could be the "block" statement, as follows:

block

{

if (currentCount == 0)

{

DoOneThing();

break;

}

if (currentCount == 1)

{

DoAnotherThing();

break;

}

// currentCount must be an illegal value

throw new Exception();

}
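
For what it is worth, Java already gets fairly close to this with labelled blocks: any block can carry a label, and "break label;" jumps to the end of it. The sketch below shows the same control flow; the class name, the method name and the returned strings are placeholders of mine, standing in for DoOneThing and DoAnotherThing.

```java
final class LabeledBlockSketch {

    static String handle(int currentCount) {
        String outcome;
        block: {
            if (currentCount == 0) {
                outcome = "did one thing";
                break block;   // leaves the labelled block, not a loop
            }
            if (currentCount == 1) {
                outcome = "did another thing";
                break block;
            }
            // currentCount must be an illegal value
            throw new IllegalArgumentException("Illegal count: " + currentCount);
        }
        return outcome;
    }

    public static void main(String[] args) {
        System.out.println(handle(0));
        System.out.println(handle(1));
    }
}
```

The label has to be named explicitly at each break, so this is slightly noisier than the proposed "block" statement, but there is no redundant loop.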

Pre and Post Conditions

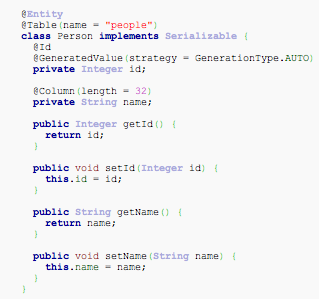

Annotations (or Attributes in C# land) are a useful construct. In Java EE, annotations can be used on domain classes (EJBs) to specify constraints on properties. For example, you can specify that the "age" of a person must be a positive integer up to a value of 100; if this constraint is violated, an exception is raised. Here is an example taken from wikipedia (http://en.wikipedia.org/wiki/Java_annotation):
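
As a rough illustration of the idea (this is my own sketch, not the Wikipedia example), a constraint annotation and a checker for it could look like the following in plain Java. The @Range annotation, the Person class and the validate method are hypothetical names; real Java EE code would lean on the platform's own validation annotations instead.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Field;

final class RangeConstraintSketch {

    // Hypothetical constraint annotation; not part of any standard library.
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.FIELD)
    @interface Range {
        int min();
        int max();
    }

    // Hypothetical domain class with a constrained property.
    static final class Person {
        @Range(min = 0, max = 100)
        int age;

        Person(int age) {
            this.age = age;
        }
    }

    // Checks every int field marked with @Range and throws if a value is out of bounds.
    static void validate(Object target) {
        for (Field field : target.getClass().getDeclaredFields()) {
            Range range = field.getAnnotation(Range.class);
            if (range == null || field.getType() != int.class) {
                continue;
            }
            try {
                field.setAccessible(true);
                int value = field.getInt(target);
                if (value < range.min() || value > range.max()) {
                    throw new IllegalStateException(field.getName() + " = " + value
                            + " is outside [" + range.min() + ", " + range.max() + "]");
                }
            } catch (IllegalAccessException e) {
                throw new IllegalStateException(e);
            }
        }
    }

    public static void main(String[] args) {
        validate(new Person(42));       // passes silently
        try {
            validate(new Person(150));  // violates the constraint
        } catch (IllegalStateException e) {
            System.out.println("Rejected: " + e.getMessage());
        }
    }
}
```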

Following on from this, has anyone seen the Eiffel programming language? Eiffel is fairly funky, and is very focused on correctness of design (which I like!). The language has the concept of pre and post conditions... in other words, it has runtime checks to make sure, for example, that a reference passed in to a method is not void, that the object is in a correct state for the method to be called in the first place, and that after the method has been executed, everything is in order as well. Here is an example taken from wikipedia:

So, how about some form of merger between annotations (C#/Java) and Eiffel's pre/post conditions mechanism? This all comes down to the idea of Design by Contract, which I think is fundamentally a good one (formally specifying what the software should do, with runtime checks to guarantee these assertions). As an aside, language level assertions I feel are great as well, but typically are turned off in production, and are a fairly coarse-grained approach to enforcing design by contract.

The idea here is to have (in modern languages, such as C#/Java), some inbuilt support for pre and post conditions. The logical place for this (for me) seems to be annotations/attributes. Now, I can certainly write custom annotations, but it will require runtime support within the VM as well... any takers out there?
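
Short of real VM support, something in this direction can be faked today with a dynamic proxy that checks annotations around each call. The sketch below is only that, a sketch: the two annotations, the Account interface and the checked helper are names I made up, and only two very simple conditions are supported (no null arguments going in, no negative int coming out).

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;

final class ContractSketch {

    // Hypothetical precondition: no argument may be null.
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.METHOD)
    @interface RequiresNonNullArgs {}

    // Hypothetical postcondition: an int result must not be negative.
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.METHOD)
    @interface EnsuresNonNegative {}

    interface Account {
        @RequiresNonNullArgs
        @EnsuresNonNegative
        int withdraw(Integer amount);   // returns the remaining balance
    }

    static final class SimpleAccount implements Account {
        private int balance;

        SimpleAccount(int balance) {
            this.balance = balance;
        }

        public int withdraw(Integer amount) {
            balance -= amount;
            return balance;
        }
    }

    // Wraps an Account so that the annotated conditions are checked on every call.
    static Account checked(Account target) {
        InvocationHandler handler = (proxy, method, args) -> {
            if (method.isAnnotationPresent(RequiresNonNullArgs.class) && args != null) {
                for (Object arg : args) {
                    if (arg == null) {
                        throw new IllegalArgumentException(
                                "Precondition failed: null argument to " + method.getName());
                    }
                }
            }
            Object result;
            try {
                result = method.invoke(target, args);
            } catch (InvocationTargetException e) {
                throw e.getCause();   // rethrow whatever the real method threw
            }
            if (method.isAnnotationPresent(EnsuresNonNegative.class)
                    && result instanceof Integer && ((Integer) result) < 0) {
                throw new IllegalStateException(
                        "Postcondition failed: " + method.getName() + " returned " + result);
            }
            return result;
        };
        return (Account) Proxy.newProxyInstance(
                Account.class.getClassLoader(), new Class<?>[] {Account.class}, handler);
    }
}
```

Calling checked(new SimpleAccount(100)).withdraw(30) goes through as normal, while a null amount fails the precondition and an overdraft fails the postcondition. The obvious weaknesses are that this only works through interfaces and that the postcondition fires after the state has already changed, which is part of why proper runtime support would be nicer.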

The New Keyword

This is a simple enhancement, just to reduce the amount of coding required on a day to day basis.

In Java/C#, a new object is created as follows:

X myX = new X();

Why can't this be reduced to

X myX = X();

or even

X myX();

To me, it seems like the "new" keyword in this context is redundant.

How About Yours?

Are there any other enhancements that you would like to see in Java/C#? Let me know!

Labels: C#, Eiffel, Java, Language Extensions, programming languages, Software design

Thursday, April 2, 2009

Salary levels

Why do nearly all companies insist that individuals' salary levels must be kept confidential? This has always surprised me, as on the stock exchange (for example), the value of stocks isn't secret. Neither are the annual rich lists, which outline the top income earners (and stockpilers) for the year.

A few years back, a workmate and I compared our salaries (well outside the work compound of course). I had put forward that we hire this guy (I was responsible for interviewing and hiring for his position... to help me out on the team, which previously comprised, well, only me really). So, we figured that I would be paid slightly more than him, but hopefully not too much more (our skill levels, experience, etc were broadly comparable). It turned out that he was getting nearly 20% more than me per year. That made both of us feel a bit uncomfortable... I was getting ripped off (it would seem), and now the person that hired him all of a sudden seemed a lot less valuable to the company. The dynamic between us changed, needless to say!

The reason for the different starting salaries, as it turned out, is that we both stated what we wanted to work for. My workmate, quite rightly, named a higher starting price, and they went for that figure, no questions asked. I guess I learnt my lesson.

Here is a great article following on from this, of course once again from Joel Spolsky.

Sunday, March 29, 2009

Licensed to Code

Here's an idea: Professional standards for software engineers.

The Concept

I have quite a few friends who are doctors, dentists, etc. They went through hard training at university... just like me. They went through lectures, assignments, exams, and had to give presentations, just like me. However, if they want to practice, they have to continually prove their level of proficiency by way of professional exams. I, however, don't have to. Once I was given my degree at university, that was the end of ever being examined.

I for one would welcome being examined every two, three, four or five years, just to keep myself provably up to date with my field. I would also like to be able to prevent people from giving themselves all sorts of silly titles such as "information architect, solutions architect, enterprise architect, analyst programmer", etc. I for one think that any software developer is an architect, a solution architect, an information architect, a user experience architect, etc.

Certainly, there are areas of specialisation, such as distributed systems, user interfaces, security, algorithms, etc, and there are key industries such as finance, travel, etc. So how about Junior/Senior Software Engineer, with an associated area, such as distributed systems/security? I just don't like the way that people can give themselves any silly title they want, never have to do any professional proficiency verification in their life, and claim to be a professional at the same level as other professions. I personally feel slightly embarrassed when my medical friends talk to me about software engineering roles/titles.

Good Old Job Interviews

As an aside, I am currently interviewing for jobs. Again, I have to do basic programming tests, along with aptitude tests, IQ tests, psychometric tests (here's a snippet from a four hour one I am about to do - along with some interesting rants), etc, which I am more than happy to do. It proves my worth, and also keeps me up to date with programming practices (I am forced to go back and relearn what I have forgotten prior to the test). However, there are some people (you know who they are) who will spend years and years in a company saying the right things to management and climbing the corporate ladder (see the next section below).

The problem with these people is that unfortunately they will end up being your boss, and they will also be making the design decisions. This is fundamentally bad. If, however, everyone must regularly show their ability, then people who want to position themselves into the title of "enterprise architect", "solutions tsar", etc will only remain in this position if they actually do hold a reasonable basic level of competence. Those who can't can at least then move on to whatever they are more suitable for, such as BA, account manager, strategic manager, etc... whatever suits them the best.

Your Boss Is Incompetent! *

* and you will be too one day.

Here is a sad fact, pointed out to me recently by a colleague. The process of workplace promotions has a slight but fundamental flaw. Let me demonstrate by way of a question: at the end of the following program, what is the value of the variable "Job_Level", presuming that you get promoted from tester (level 1) to developer to architect to delivery manager?

10 Declare Job_Level = 1

20 Do work

30 If it is time to retire or Job_Level >= MAX_JOB_LEVEL_HERE, end program

40 Time goes by

50 If you are really good, Job_Level := Job_Level + 1

60 If you are really bad, Job_Level := Job_Level - 1

70 If you are really really bad, end program

80 Goto 20

I guess the answer is level 4. Great news. You are a delivery manager (hard job... dealing with BAs, developers, architects, stakeholders... and the buck stops with you). However, if you were good at being a delivery manager... would you not have been promoted (over time) to the next level up (say Strategic Director, Board Member, etc)? Yes, you most likely would have, unless you said "actually, I am happy with where I am, and don't wish to climb the ladder any further". However, if you weren't promoted, this doesn't mean that you were doing a great job... it just means that you weren't doing the job badly enough to get demoted or fired.

So... why don't all companies attach conditions to every promotion, with a probation period in place for the first few rounds of reviews? That would get around the problem I see a lot - where people position themselves into a nice, easy middle management job, and then just sit there for years, claiming a big salary, making ineffective decisions, pushing their own political agenda, and figuring out exactly why it is that they should never be fired. In small companies this is not so much of an issue, but as companies grow, and relationships across business units become more complex, it is possible to get yourself into a position of power - where no one knows what you are actually doing - but everyone is too afraid to question it :)

Sunday, March 22, 2009

How to Develop Good Software

Here are a few thoughts on where we are going right, and wrong, with commercial software development. Enjoy, agree, disagree, make comments, ...

Test driven design (TDD)

- I really like the concept of TDD. Get the test team in early to think about the proposed software, and to commit that they can in fact test the software to be developed (how often are the QAers brought in right at the end of the project?). One recent project I was on ended up costing about three times the projected amount, as it turned out that we simply couldn't test the software (this software sent updated itineraries to customers based on changes to airline schedules... it worked fine in development, but was hard to test in pre-production as we didn't really have a way of simulating airlines cancelling flights.... except for calling in bomb-threats to airports that is...). Other good things about TDD include designing the system for testing, so there are no nasty surprises when it comes time to test, and mocking out each component in the system, so you can test each part of the system (including the core) in isolation (unit tests have to run fast... otherwise there is little point in having them).

- Test driven design works, if done well. Martin Fowler suggests taking very small steps (red/green refactoring), but if you are more experienced than this, I suggest taking bigger steps. If you are a competent developer, there is no point writing a test that doesn't compile, just to get to the "red bar" stage. If you know that you need to implement a stub service before any of the tests compile, then do so. This won't break the project, and this keeps your vision on the goal of producing working software. Too often I see very good engineers get bogged down by the religion and process of Test Driven Design/Agile Methods.

- I do however like the idea of writing unit tests that fail first time around (note the difference between failing and simply not compiling). Red-bar (failed) tests the first time round prove that your unit test, in its initial state, will not give you a false positive. This is a lot better than writing unit tests that mistakenly give you a positive result, meaning that the defects will only be found at a later, more expensive stage of development.

- Be careful with getting too carried away with unit tests. Be prepared to throw your unit tests out just as quickly as BAs throw out requirements. It is easy to get quick coverage (the 80/20 rule), but don't spend too long on any one test or part of the system.

- Watch out for over-architecting the test set-ups. If you have a central system for example, that has requests coming in from a UI, makes calls to a web service, and perhaps writes to a database and/or message queue, well, there is already an exponential number of possible test combinations here. The web service, for example can be mocked out on the client side, the server side, you could also use SoapUI to mock both the server and the client, etc. So, a test setup could use a unit test which calls the business layer directly (bypassing the UI), mocks out the web service client, calls the real web service implementation, which in turn writes to a mock database. This is a valid test, which certainly tests the business layer. However, you could also configure the test to call the real web service client, which calls a fake web service implementation. So the real question to ask is "what am I trying to test within this unit test", and then focus on not getting confused between unit testing and integration testing. Integration testing, on the other hand, I see as a superset of unit testing... where you might not want to test for all test cases and inputs, but do want to test each pair of closely related components, as well as a complete (end to end) run of components as well (if that is possible). The only catch with this is that if you are calling third party/high latency/non-controllable services, you probably want to mock these out at the far end of the component if possible.
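
To illustrate the mocking points in the list above, here is a small sketch of how the itinerary example could have been made testable: the airline schedule source sits behind an interface, so a test can simulate cancelled flights without involving any real airline. All names here (ScheduleFeed, CustomerMailer, ItineraryNotifier) and the flight numbers are invented for the illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class ItineraryNotifierSketch {

    // What the core needs from the airline schedule source (hypothetical).
    interface ScheduleFeed {
        List<String> cancelledFlights();
    }

    // What the core needs in order to contact customers (hypothetical).
    interface CustomerMailer {
        void send(String flight, String message);
    }

    // The component under test: it only ever sees the two interfaces.
    static final class ItineraryNotifier {
        private final ScheduleFeed feed;
        private final CustomerMailer mailer;

        ItineraryNotifier(ScheduleFeed feed, CustomerMailer mailer) {
            this.feed = feed;
            this.mailer = mailer;
        }

        // Sends one message per cancelled flight and returns how many were sent.
        int notifyCustomers() {
            int sent = 0;
            for (String flight : feed.cancelledFlights()) {
                mailer.send(flight, "Flight " + flight + " has been cancelled");
                sent++;
            }
            return sent;
        }
    }

    public static void main(String[] args) {
        List<String> outbox = new ArrayList<>();
        // Fake feed: "cancels" two made-up flights on demand.
        ScheduleFeed fakeFeed = () -> Arrays.asList("XX101", "XX202");
        // Recording mailer: captures messages instead of sending them.
        CustomerMailer recordingMailer = (flight, message) -> outbox.add(message);

        int sent = new ItineraryNotifier(fakeFeed, recordingMailer).notifyCustomers();
        System.out.println(sent + " messages: " + outbox);
    }
}
```

This is a unit test of the core only. Whether the real feed and the real mailer talk to each other correctly is still a question for integration testing.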

Continuous Integration

- I am a fan of Continuous Integration. Use something like FinalBuilder, set up triggers so that your code is compiled on every check-in, run all your unit tests on every build, have an installer built automatically, and push the installer out to a clean VM on every build as well (make sure that it extracts, installs, and runs correctly). For each company that I have set this up, it has taken a week, and saved a year. One of the most expensive parts of software development is QA, and when QAers don't have a reliable, trustworthy platform to test on, developers don't believe that the bugs are "real bugs"... they often suspect that the QAer has just highlighted an environment setup issue, and often this is the case. It truly surprises me how much money companies are willing to lose in having badly set up/under-resourced QA environments before they finally sort things out and become productive.

- Don't get bogged down by architecture and buzzwords. Nearly every company I have worked for has had some "glory project" where a framework/architecture is designed to revolutionise the way that the company writes code. There are problems with this: architectures that are designed by architects are too complex/abstract. Architectures need to come from developers, based directly on business needs... trust me on this one. Secondly, architectures need to be maintained as technologies are updated/replaced. I have never once seen a proprietary architecture/framework be actually used successfully in a large organisation... they tend to be pipe-dreams that are sold to management but have little useful content for developers (and can actually stifle productivity).

- Don't get carried away with new technologies such as dependency injection/inversion of control, etc. The use of dependency injection is nice, and there are some great frameworks out there (check out SEAM for Java and the Unity application block for .Net), however, the frameworks and related hype can actually far outweigh the actual point of the technique. Dependency injection, for example, is just the concept of assigning objects at runtime... for example, creating an instance of a TextStreamWriter instead of an instance of a database writer, and passing this reference to the consuming component. That's about it. Inversion of control is basically the same concept, but the decision about which instance to instantiate is made from another context (perhaps a unit test, a manager class, or even an xml configuration file).

- Don't go crazy with complicated language constructs. Certainly, you can do a lot with annotations, xml configuration, dependency injection, etc., and your code may be more elegant and concise, but chances are it is harder to read, took just as long to write, and fewer people will be able to maintain/extend it. So where is the real gain here?

- Don't micromanage software teams. If you are using Scrum (check out this gem of a scrum parody), then great, but don't let the project manager rule the team (if he/she does, then you don't have Scrum, you have conventional software development). The importance of self-organising teams is underrated and overlooked. The problem with micromanagement is that the team ends up working on project planning, estimates, and meetings, rather than actually coding. A scrum master really is just someone to keep the meetings on track, to report externally on behalf of the group, and to prioritise the next stories in the sprint. There is still very much a role for project management in Scrum... the same role as traditional project managers - to ask if there are any problems/impediments within the team, and to fix these impediments as pragmatically as possible.

- Everyone in a software development group must be professional. This includes the IT support staff, the BAs, the PMs, etc. Without formal, tertiary training in software development, the team is unfortunately reduced to the lowest common denominator. This might sound high and mighty, but software is hard to write and most projects fail. There is a reason for this... software is a hard topic to master, and the risk of failure is higher as the team size increases. Having uneducated people in the team - adopting a "let's just see how this goes" attitude - is a recipe for disaster. I guarantee that teams who design planes or perform surgery all have years of study behind them.

- Be careful of the term "agile". Agile doesn't mean being simplistic, it means doing the simplest, most logical thing because the business doesn't know which way it wants to go right now. If you know that the system must have certain performance characteristics, support multi-threading, or support a particular set of business functions and/or complex workflows, don't ignore these requirements for the sake of being agile and "taking small steps". Take steps appropriate to your skill level and judgement.

- I am incredibly wary of any technology or process that is hailed as the solution to guarantee the success of a project. A small, competent and fluid team of developers can make nearly anything work using any process or technology, whereas a technology or development process will fail with 100% certainty if the people behind it are not competent. It's all about getting what you paid for.

- Design patterns work. Use them. But don't get sucked in by every pattern book out there. Stick to the highly rated ones (GoF, Fowler's Patterns of Enterprise Application Architecture, etc).

- Stay consistent with your code. Don't use every language construct just because you can. If you do, your code will look bad, and probably be harder to debug.

- Refactoring is useful, but it is always better to write things correctly the first time. This might involve thinking about code before you write it, spending time on design, writing pseudocode, and writing prototypes (and then happily throwing them away).

- I like the idea of writing as little code as possible. If there is a way to use existing code, an existing API, etc, then do it. Spend time searching around and play with existing libraries (the Microsoft Application Blocks are great, for example), but at some point you will need to call it a day and just write what you need. As long as you write your code in a modular fashion, you should be able to swap it out when someone points out the library that you should have used all along.

- This idea is taken from Joel Spolsky and I like it a lot. Record your estimated hours to complete a task alongside the estimates of anyone who disagrees. Keep these records, and once the task is complete, record the actual hours. Continue doing this for every task. The more history you build up, the more weight you will have when discussing estimates in the future. It often surprises me that BAs and PMs with very little software development experience will argue over how many hours to put into an estimate. I don't argue with my dentist when he tells me that it will cost $400 to remove a tooth, or my lawyer when she tells me that I will need to keep records of all income earned by my family trust. I figure they went to university for a reason, and know better than me. Why are software engineers not given the same respect?
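
The estimate tracking in that last point can be as simple as a small script. Here is a rough sketch in Python, assuming you log each person's estimate per task and fill in the actual hours later. The names (`TaskRecord`, `mean_error_ratio`), the sample tasks, and the error measure (average absolute error as a fraction of actual hours) are all made up for illustration, and are not taken from Joel's articles.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class TaskRecord:
    """One task, the competing estimates for it, and the eventual actual hours."""
    name: str
    estimates: Dict[str, float]            # estimator -> estimated hours
    actual_hours: Optional[float] = None   # filled in once the task is complete


def mean_error_ratio(records: List[TaskRecord]) -> Dict[str, float]:
    """Average |estimate - actual| / actual per estimator, over completed tasks only."""
    ratios = defaultdict(list)
    for record in records:
        if record.actual_hours is None:
            continue  # task not finished yet, nothing to compare against
        for who, hours in record.estimates.items():
            ratios[who].append(abs(hours - record.actual_hours) / record.actual_hours)
    return {who: sum(values) / len(values) for who, values in ratios.items()}


# Hypothetical history: two finished tasks and one still in progress.
records = [
    TaskRecord("login page", {"developer": 16, "pm": 8}, actual_hours=20),
    TaskRecord("report export", {"developer": 10, "pm": 4}, actual_hours=8),
    TaskRecord("audit log", {"developer": 12, "pm": 6}),
]

errors = mean_error_ratio(records)
for who, error in sorted(errors.items()):
    print(f"{who}: off by {error:.1%} on average")
```

With this made-up history the developer is off by 22.5% on average and the PM by 55%. Nobody's estimates are perfect, but a table like this is much harder to argue with than a gut feeling.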

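To back up the earlier point that dependency injection is a small idea, here is a minimal sketch in Python (chosen only for brevity; the same shape works in Java or .Net). `TextStreamWriter` is the name used in that point, while `Writer`, `DatabaseWriter`, and `ReportGenerator` are hypothetical names invented for this example, and the "database" is just a list in memory.

```python
import io
from typing import Iterable, List, Protocol


class Writer(Protocol):
    """Anything with a write(text) method can be injected."""
    def write(self, text: str) -> None: ...


class TextStreamWriter:
    """Writes lines to any text stream (a file, stdout, or an in-memory buffer)."""
    def __init__(self, stream) -> None:
        self._stream = stream

    def write(self, text: str) -> None:
        self._stream.write(text + "\n")


class DatabaseWriter:
    """Stand-in for a database-backed writer; rows are simply kept in a list."""
    def __init__(self) -> None:
        self.rows: List[str] = []

    def write(self, text: str) -> None:
        self.rows.append(text)


class ReportGenerator:
    """The consuming component: it uses whatever writer it is handed."""
    def __init__(self, writer: Writer) -> None:
        self._writer = writer

    def run(self, items: Iterable[str]) -> None:
        for item in items:
            self._writer.write(f"processed {item}")


# The caller (a unit test, a manager class, a config loader) picks the instance.
buffer = io.StringIO()
ReportGenerator(TextStreamWriter(buffer)).run(["a", "b"])

db_writer = DatabaseWriter()
ReportGenerator(db_writer).run(["a", "b"])
```

`ReportGenerator` never knows which writer it got. A framework only automates the last few lines, where the instance is chosen and passed in.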